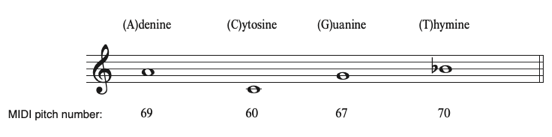

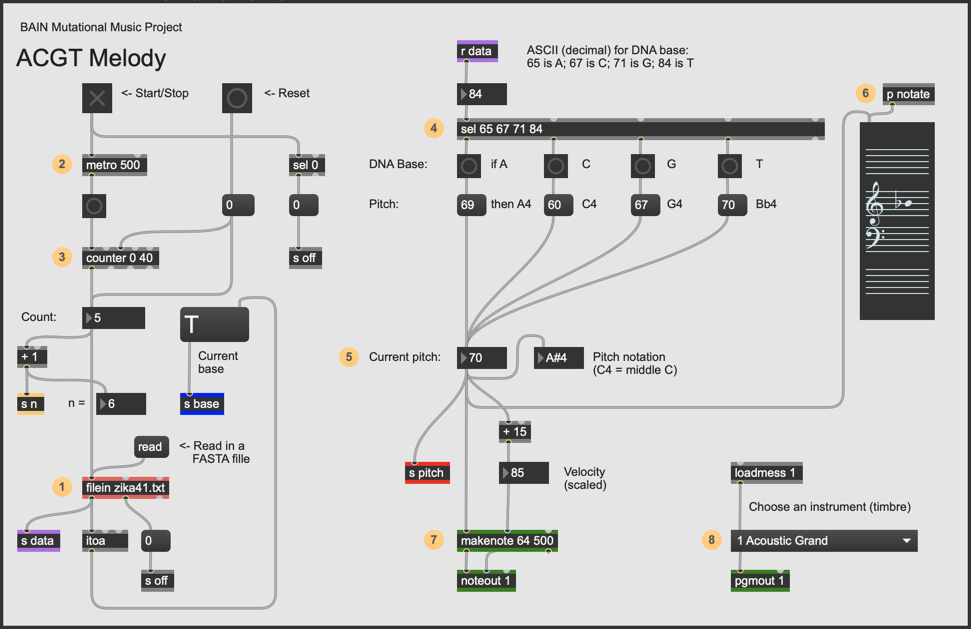

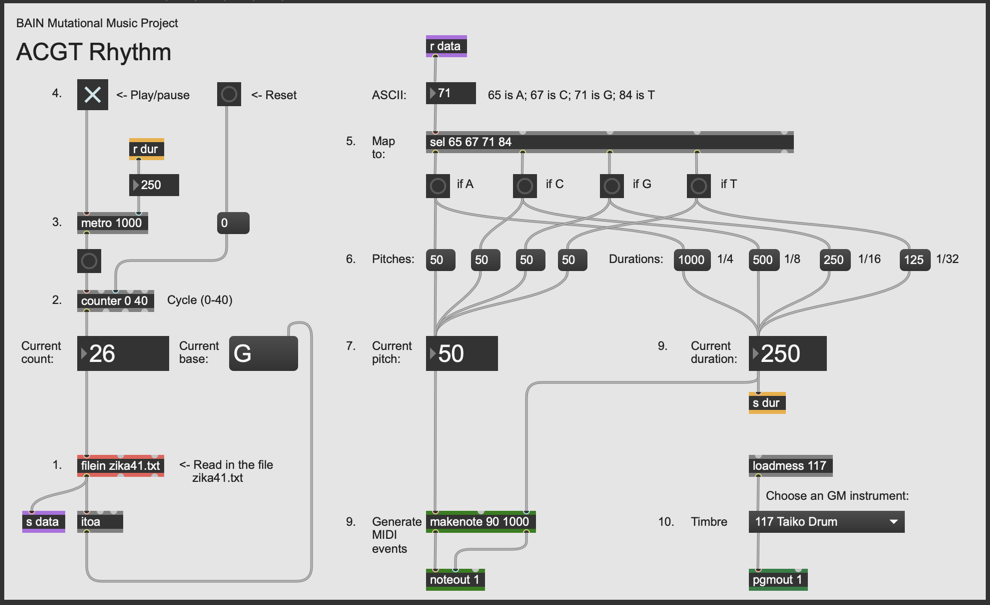

The sonification of a DNA sequence provides a simple generative model for experimentation in algorithmic composition. Students must choose the data, the mapping strategy, and the musical parameters in a way that yields aesthetically interesting results, and in making these choices they must balance scientific and artistic concerns. I have found this to be an efficient way to introduce students to the basic principles of parameter mapping sonification. It gives them a conceptual model from which they can go on to design their own, more rigorous scientific experiments that map genetic data to sound.
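To make the choices in the preceding paragraph concrete, here is a minimal Python sketch of a parameter mapping from a DNA string to note events. The mapping is a hypothetical example chosen for clarity, not one proposed in the sources listed below. It sends A, C, G and T to the MIDI notes of a C-major triad plus the octave, and it turns a run of repeated bases into a single longer note, so that repetition in the data becomes rhythm. The names `BASE_TO_MIDI`, `NoteEvent`, `sonify` and `midi_to_hz` are likewise illustrative.

```python
from dataclasses import dataclass
from itertools import groupby

# Hypothetical pitch mapping (an assumption for illustration only):
# each nucleotide is assigned one note of a C-major triad plus the octave.
BASE_TO_MIDI = {"A": 60, "C": 64, "G": 67, "T": 72}  # C4, E4, G4, C5


@dataclass(frozen=True)
class NoteEvent:
    pitch: int       # MIDI note number
    duration: float  # length in beats


def midi_to_hz(pitch: int) -> float:
    """Equal-tempered frequency of a MIDI note, with A4 (note 69) = 440 Hz."""
    return 440.0 * 2 ** ((pitch - 69) / 12)


def sonify(sequence: str, beat: float = 0.5) -> list[NoteEvent]:
    """Map a DNA string to a list of note events.

    Pitch comes from BASE_TO_MIDI. Duration is a second, hypothetical
    mapping choice: a run of n identical bases becomes one note lasting
    n * beat. Characters outside A/C/G/T (e.g. 'N' for an unknown base)
    are dropped before grouping, so they do not interrupt a run.
    """
    bases = [b for b in sequence.upper() if b in BASE_TO_MIDI]
    return [
        NoteEvent(BASE_TO_MIDI[base], beat * len(list(run)))
        for base, run in groupby(bases)
    ]


if __name__ == "__main__":
    # An arbitrary short string for demonstration, not real genomic data.
    for event in sonify("ATGGCGTTTAAC"):
        print(event.pitch, event.duration, round(midi_to_hz(event.pitch), 2))
```

Each design decision in the sketch stands apart from the others. Swapping in a different scale for `BASE_TO_MIDI`, grouping bases into codons before mapping, or mapping a different property to duration changes the musical result without touching the rest of the code. These are the kinds of trade-offs between scientific legibility and musical interest that students are asked to weigh.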

Ballora, Mark. 2014. "Sonification, Science and Popular Music: In search of the 'wow'." Organised Sound 19/1: 30–40.

Barrass, Stephen and Paul Vickers. 2011. "Sonification Design and Aesthetics." In The Sonification Handbook, edited by T. Hermann, A. Hunt and J. G. Neuhoff. Berlin: Logos Publishing House, pp. 363–397.

Ben-Tal, Oded and Jonathan Berger. 2004. "Creative Aspects of Sonification." Leonardo 37/3 (June 2004): 229–233.

Bovermann, Till, Julian Rohrhuber and Alberto de Campo. 2011. "Chapter 10. Laboratory Methods for Experimental Sonification." In The Sonification Handbook, edited by T. Hermann, A. Hunt and J. G. Neuhoff. Berlin: Logos Publishing House. Available online at: <https://sonification.de/handbook/chapters/chapter10/>.

Brooker, Robert J. 2009. Genetics: Analysis & Principles, 3rd ed. New York: McGraw Hill.

Clark, Mary Ann, and John Dunn. 1999. "Life Music: The Sonification of Proteins." Leonardo 32/1 (February 1999): 25–32.

Deamer, David. 1982. "Music: The Arts." Omni Magazine (August 1982): 28 and 120.

Deutsch, Diana. 2013. The Psychology of Music, 3rd ed. Cambridge, MA: Academic Press.

Dubus, Gaël and Roberto Bresin. 2013. "A Systematic Review of Mapping Strategies for the Sonification of Physical Quantities." PLOS ONE 8/12 (December 17, 2013).

Edwards, Michael. 2011. "Algorithmic Composition: Computational Thinking in Music." Communications of the ACM 54/7: 58–67.

Grond, Florian, and Jonathan Berger. 2011. "Chapter 15. Parameter Mapping Sonification." In The Sonification Handbook, edited by T. Hermann, A. Hunt and J. G. Neuhoff. Berlin: Logos Publishing House. Available online at: <https://sonification.de/handbook/chapters/chapter15/>.

Hall, Rachel Wells. 2008. "Geometrical Music Theory." Science 320/5874: 328–329.

Hass, Jeffrey. 2021. Introduction to Computer Music: An Electronic Textbook, 2nd ed. Bloomington, IN: Indiana University. Available online at: <https://cmtext.indiana.edu>.

Hayashi, Kenshi and Nobuo Munakata. 1984. "Basically Musical." Nature 310 (July 12, 1984): 96.

Hermann, Thomas, A. Hunt and J. G. Neuhoff, eds. 2011. The Sonification Handbook. Berlin: Logos Publishing House. Available online at: <https://sonification.de/handbook/>.

Jensen, Marc. 2008. "Composing DNA Music Within the Aesthetics of Chance." Perspectives of New Music 46/2 (Summer 2008): 243–259.

Kramer, Gregory, et al. 1999. "Sonification Report: Status of the Field and Research Agenda." Available online at: <http://www.icad.org/websiteV2.0/References/nsf.html>.

LaRue, Jan. 1992/1970. Guidelines for Style Analysis, 2nd ed. Sterling Heights, MI: Harmonie Park Press.

Mathews, Max. 1963. "The Digital Computer

as a Musical Instrument." Science 142/3592 (Nov. 1, 1963):

553–557.

Munakata, Nubuo. 2002. "Individuality,

creativity and genetic information: a prelude to gene music."

Available online at: <http://www.toshima.ne.jp/edogiku/InCrGehtml/AwhtmlInCrGe.html>.

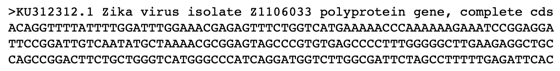

NCBI. 2016. Zika virus isolate

Z1106033 polyprotein gene. Available online at: <https://www.ncbi.nlm.nih.gov/nuccore/KU312312.1>.

Nierhaus, Gerhard. 2008. Algorithmic

Composition: Paradigms of Automated Music Generation. New York:

Springer.

Nyman, Michael. 1999. Experimental

Music: Cage and Beyond, 2nd ed. Cambridge: Cambridge University

Press.

Manzo, V.J. 2016. Max/MSP/Jitter for

Music: A Practical Guide to Developing Interactive Music Systems for

Education and More, 2nd ed. New York, Oxford.

Roads, Curtis. 2015. Composing Electronic Music: A New Aesthetic. New York: Oxford University Press.

Scaletti, Carla. 2018. "Sonification ≠ Music." In The Oxford Handbook of Algorithmic Music, edited by Roger T. Dean and Alex McLean. New York: Oxford University Press.

Smalley, Denis. 1997. "Spectromorphology: explaining sound-shapes." Organised Sound 2/2: 107–126.

Stockhausen, Karlheinz. 1959. "How Time Passes," translated by Cornelius Cardew. Die Reihe 3 (Musical Craftsmanship): 10–40.

Stockhausen, Karlheinz and Elaine Barkin. 1962. "The Concept of Unity in Electronic Music." Perspectives of New Music 1/1 (Autumn 1962): 39–48.

Supper, Alexandra. 2014. "Sublime Frequencies: The construction of sublime listening experience in the sonification of scientific data." Social Studies of Science 44/1: 34–58.

Takahashi, Rie and Jeffrey H. Miller. 2007. "Conversion of amino-acid sequence in proteins to classical music: search for auditory patterns." Genome Biology 8/5, Article 405.

Taylor, Stephen Andrew. 2017. L13 Protein Folding Sonification. Available online at: <http://www.stephenandrewtaylor.net/genetics.html>.

Toussaint, Godfried. 2013. The Geometry of Musical Rhythm: What Makes a "Good" Rhythm Good?, 1st ed. Boca Raton, FL: CRC Press.

Tymoczko, Dmitri. 2011a. A Geometry of Music: Harmony and Counterpoint in the Extended Common Practice. New York: Oxford University Press.